Generative AI, particularly text-to-image AI, is attracting as many lawsuits as it is venture dollars.

Two companies behind popular AI art tools, Midjourney and Stability AI, are entangled in a legal case that alleges they infringed on the rights of millions of artists by training their tools on web-scraped images. Separately, stock image supplier Getty Images took Stability AI to court for reportedly using images from its site without permission to train Stable Diffusion, an art-generating AI.

Generative AI’s flaws, namely a tendency to regurgitate the data it’s trained on and, relatedly, the makeup of its training data, continue to put it in the legal crosshairs. But a new startup, Bria, claims to minimize the risk by training image-generating (and soon video-generating) AI in an “ethical” way.

“Our goal is to empower both developers and creators while ensuring that our platform is legally and ethically sound,” Yair Adato, the co-founder of Bria, told TechCrunch in an email interview. “We combined the best of visual generative AI technology and responsible AI practices to create a sustainable model that prioritizes these considerations.”

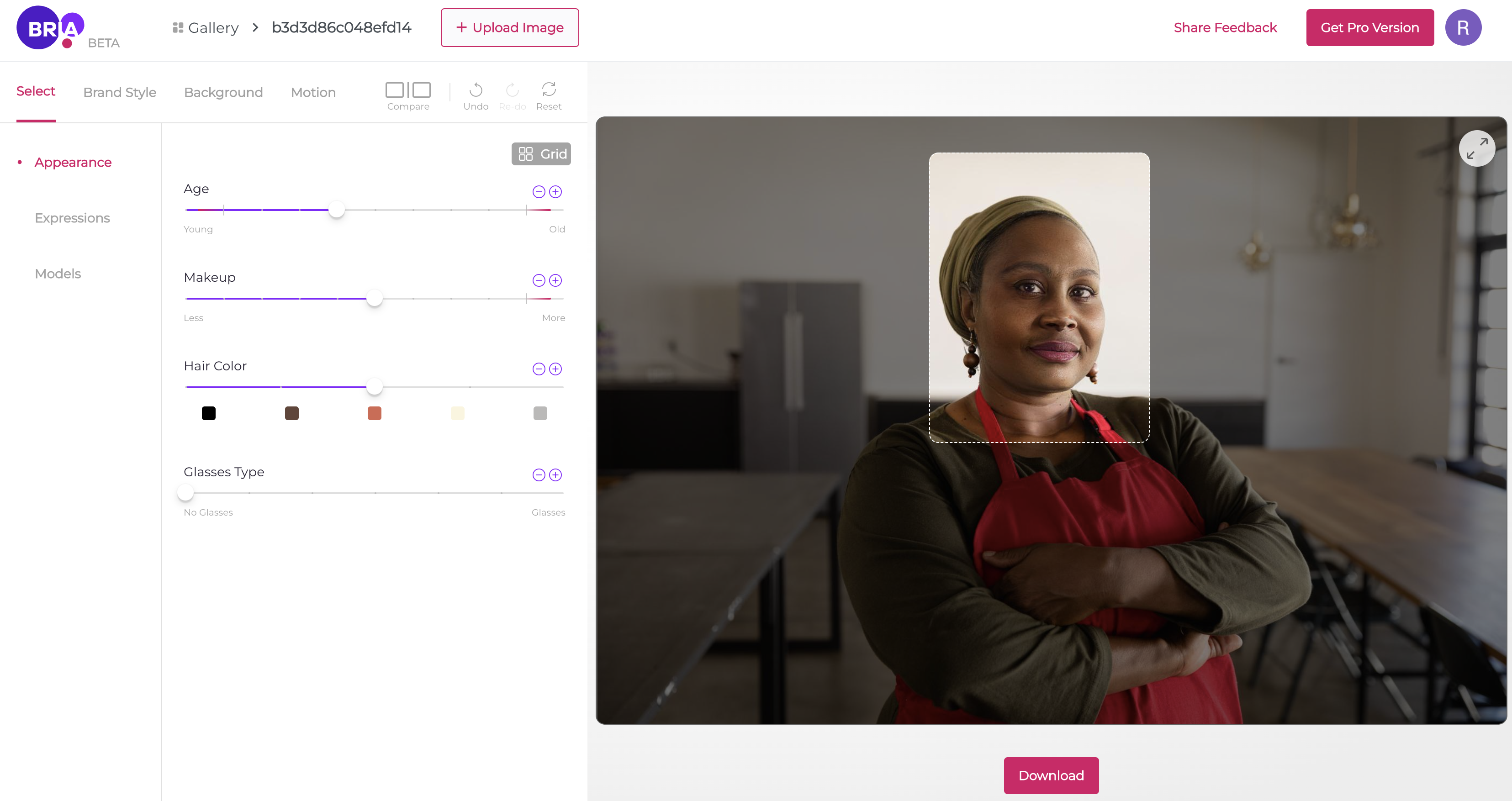

Image Credits: Bria

Adato co-founded Bria in 2020, as the pandemic hit, and the company’s other co-founder, Assa Eldar, joined in 2022. Adato says he developed a passion for computer vision, and its potential to “improve” communication through generative AI, during his Ph.D. studies in computer science at Ben-Gurion University of the Negev.

“I realized that there’s a real business use case for this,” Adato said. “The process of creating visuals is complex, manual and often requires specialized skills. Bria was created to address this challenge — providing a visual generative AI platform tailored to enterprises that digitizes and automates this entire process.”

Thanks to recent advancements in AI on both the commercial and research sides (open source models, the decreasing cost of compute and so on), there’s no shortage of platforms that offer text-to-image AI art tools (Midjourney, DeviantArt, etc.). But Adato claims that Bria is different in that it (1) focuses exclusively on the enterprise and (2) was built from the start with ethical considerations in mind.

Bria’s platform enables businesses to create visuals for social media posts, ads and e-commerce listings using its image-generating AI. Via a web app (an API is on the way) and Nvidia’s Picasso cloud AI service, customers can generate, modify or upload visuals and optionally switch on a “brand guardian” feature, which attempts to ensure their visuals follow brand guidelines.

The AI in question is trained on “authorized” datasets containing content that Bria licenses from partners. Those partners, which include individual photographers and artists as well as media companies and stock image repositories, receive a portion of the startup’s revenue.

Bria isn’t the only venture exploring a revenue-sharing business model for generative AI. Shutterstock’s recently launched Contributors Fund reimburses creators whose work is used to train AI art models, while OpenAI licensed a portion of Shutterstock’s library to train DALL-E 2, its image generation tool. Adobe, meanwhile, says that it’s developing a compensation model for contributors to Adobe Stock, its stock content library, that’ll allow them to “monetize their talents” and benefit from any revenue its generative AI technology, Firefly, brings in.

But Bria’s approach is more extensive, Adato tells me. The company’s revenue share model rewards data owners based on their contributions’ impact, allowing artists to set prices on a per-AI-training-run basis.

Adato explains: “Every time an image is generated using Bria’s generative platform, we trace back the visuals in the training set that contributed the most to the [generated art], and we use our technology to allocate revenue among the creators. This approach allows us to have multiple licensed sources in our training set, including artists, and avoid any issues related to copyright infringement.”


Bria also clearly denotes all generated images on its platform with a watermark and provides free access — or so it claims, at least — to nonprofits and academics who “work to democratize creativity, prevent deepfakes or promote diversity.”

In the coming months, Bria plans to go a step further, offering an open source generative AI art model with a built-in attribution mechanism. There have been attempts at this, like Have I Been Trained? and Stable Attribution, sites that make a best effort to identify which art pieces contributed to a particular AI-generated visual. But Bria’s model will allow other generative platforms to establish similar revenue-sharing arrangements with creators, Adato says.

It’s tough to put too much stock in Bria’s tech given the nascency of the generative AI industry. It’s unclear how, for example, Bria is “tracing back” visuals in the training sets and using this data to portion out revenue. How will Bria resolve complaints from creators who allege they’re being unfairly underpaid? Will bugs in the system result in some creators being overpaid? Time will tell.

Adato exudes the confidence you’d expect from a founder despite the unknowns, arguing Bria’s platform ensures each contributor to the AI training datasets gets their fair share based on usage and “real impact.”

“We believe that the most effective way to solve [the challenges around generative AI] is at the training set level, by using a high-quality, enterprise-grade, balanced and safe training set,” Adato said. “When it comes to adopting generative AI, companies need to consider the ethical and legal implications to ensure that the technology is used in a responsible and safe manner. However, by working with Bria, companies can rest assured that these concerns are taken care of.”

That’s an open question. And it’s not the only one.

What if a creator wants to opt out of Bria’s platform? Can they? Adato assures me that they’ll be able to. But Bria uses its own opt-out mechanism as opposed to a common standard such as DeviantArt’s or artist advocacy group Spawning’s, which offers a website where artists can remove their art from one of the more popular generative art training data sets.

That raises the burden for content creators, who now potentially have to take steps to remove their art from yet another generative AI platform (unless, of course, they use a “cloaking” tool such as Glaze, which renders their art untrainable). Adato doesn’t see it that way.

“We’ve made it a priority to focus on safe and quality enterprise data collections in the construction of our training sets to avoid biased or toxic data and copyright infringement,” he said. “Overall, our commitment to ethical and responsible training of AI models sets us apart from our competitors.”

Those competitors include incumbents like OpenAI, Midjourney and Stability AI, as well as Jasper, whose generative art tool, Jasper Art, also targets enterprise customers. The formidable competition and open ethical questions don’t seem to have scared away investors, though: Bria has raised $10 million in venture capital to date from Entrée Capital, IN Venture, Getty Images and a group of Israeli angel investors.


Adato said that Bria is currently serving “a range” of clients, including marketing agencies, visual stock repositories and tech and marketing firms. “We’re committed to continuing to grow our customer base and provide them with innovative solutions for their visual communication needs,” he added.

Should Bria succeed, part of me wonders if it’ll spawn a new crop of generative AI companies more limited in scope than the big players today — and thus less susceptible to legal challenges. With funding for generative AI starting to cool off, partly because of the high level of competition and questions around liability, more “narrow” generative AI startups just might stand a chance at cutting through the noise — and avoiding lawsuits in the process.

We’ll have to wait and see.